Videos

See AI2's full collection of videos on our YouTube channel.

Viewing 201-210 of 257 videos

Better Knowledge Graphs Through Probabilistic Graphical Models

August 22, 2016 | Jay Pujara

Automated question answering, knowledgeable digital assistants, and grappling with the massive data flooding the Web all depend on structured knowledge. Precise knowledge graphs capturing the many, complex relationships between entities are the missing piece for many problems, but knowledge graph construction is…

Exploiting Universal Grammatical Properties to Induce CCG Grammars

August 1, 2016 | Dan Garrette

Learning NLP models from weak forms of supervision has become increasingly important as the field moves toward applications in new languages and domains. Much of the existing work in this area has focused on designing learning approaches that are able to make use of small amounts of human-generated data. In…

Open Information Extraction: Where Are We Going?

July 18, 2016 | Claudio Delli Bovi

The Open Information Extraction (OIE) paradigm has received much attention in the NLP community over the last decade. Since the earliest days, most OIE approaches have focused on Web-scale corpora, which raises issues such as massive amounts of noise. Also, OIE systems can be very different in nature and…

Near-Optimal Adaptive Information Acquisition: Theory and Applications

July 15, 2016 | Yuxin Chen

Sequential information gathering, i.e., selectively acquiring the most useful data, plays a key role in interactive machine learning systems. This problem has been studied in the context of Bayesian active learning and experimental design, decision making, optimal control, and numerous other domains. In this talk…

Representing Meaning with a Combination of Logical and Distributional Models

June 21, 2016 | Katrin Erk

As the field of Natural Language Processing develops, more ambitious semantic tasks are being addressed, such as Question Answering (QA) and Recognizing Textual Entailment (RTE). Solving these tasks requires (ideally) an in-depth representation of sentence structure as well as expressive and flexible…

Relating Natural Language and Visual Recognition

June 20, 2016 | Marcus Rohrbach

Language is the most important channel for humans to communicate about what they see. To allow an intelligent system to communicate effectively with humans, it is thus important to enable it to relate information in words and sentences to the visual world. For this, a system should be compositional, so it is e.g…

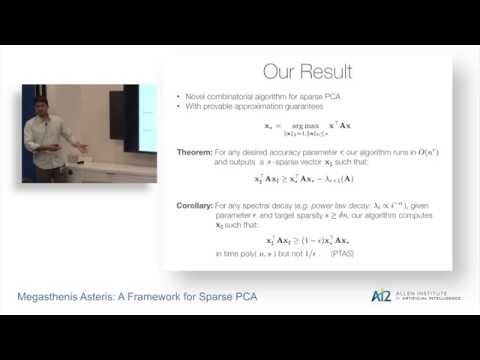

A Framework for Sparse PCA

June 13, 2016 | Megasthenis Asteris

Principal component analysis (PCA) is one of the most popular tools for identifying structure and extracting interpretable information from datasets. In this talk, I will discuss constrained variants of PCA such as Sparse or Nonnegative PCA that are computationally harder, but offer higher data interpretability…

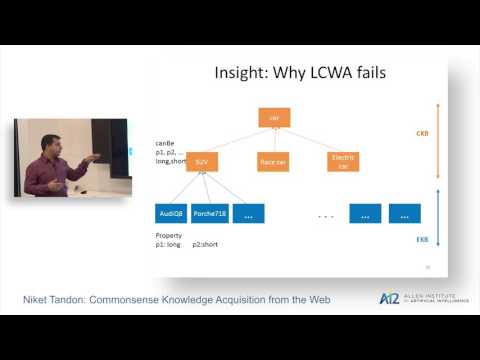

Commonsense Knowledge Acquisition from the Web

June 13, 2016 | Niket Tandon

There is a growing conviction that the future of computing will crucially depend on our ability to exploit Big Data on the Web to produce significantly more intelligent and knowledgeable systems. This includes encyclopedic knowledge (for factual knowledge) and commonsense knowledge (for more advanced human-like…

AI for the Common Good

May 23, 2016 | Oren Etzioni

Oren Etzioni, CEO of the Allen Institute for AI, shares his vision for deploying AI technologies for the common good.

Efficiently Learning and Applying Dense Feature Representations for NLP

May 17, 2016 | Yi Yang

With the resurgence of neural networks, low-dimensional dense features have been used in a wide range of natural language processing problems. Specifically, tasks like part-of-speech tagging, dependency parsing and entity linking have been shown to benefit from dense feature representations from both efficiency…