Diversity, Equity, & Inclusion

“Core to AI2 is our aim to advance the field of AI for common good, and diverse perspectives are vital to helping us get there. Diversity, equity, and inclusion are non-negotiable tenets of our work and culture, and while we are proud of where we are, we know there is always more work to do.”

Peter Clark, Senior Research Director

Our commitment to diversity

At AI2, we are committed to fostering a diverse, inclusive environment within our institute and to encouraging these values in the wider research community. A diverse group of employees brings a variety of perspectives that encourage novel ideas and new approaches to the data and challenges present in AI research.

Supporting a diverse team

AI2 has a Diversity, Equity, and Inclusion Council that is open to any team member from across the organization. We meet regularly and make active progress across several initiatives to support DEI both internally and externally.

For our team at AI2, we offer:

- A fair and employee-empowering review process

- Generous tuition and professional membership reimbursements

- Strong support for employees who need assistance attaining visa sponsorship

- Financial support for childcare requirements during business travel

- Paid parental and maternity leave for birth, adoption, or fostering

AI2 also supports DEI-related initiatives in the wider community

Shining a light on DEI challenges through research

AI2’s nonprofit status and unique mission of AI for the Common Good allow us to provide our team members with the autonomy and support to pursue projects related to diversity and inclusion.

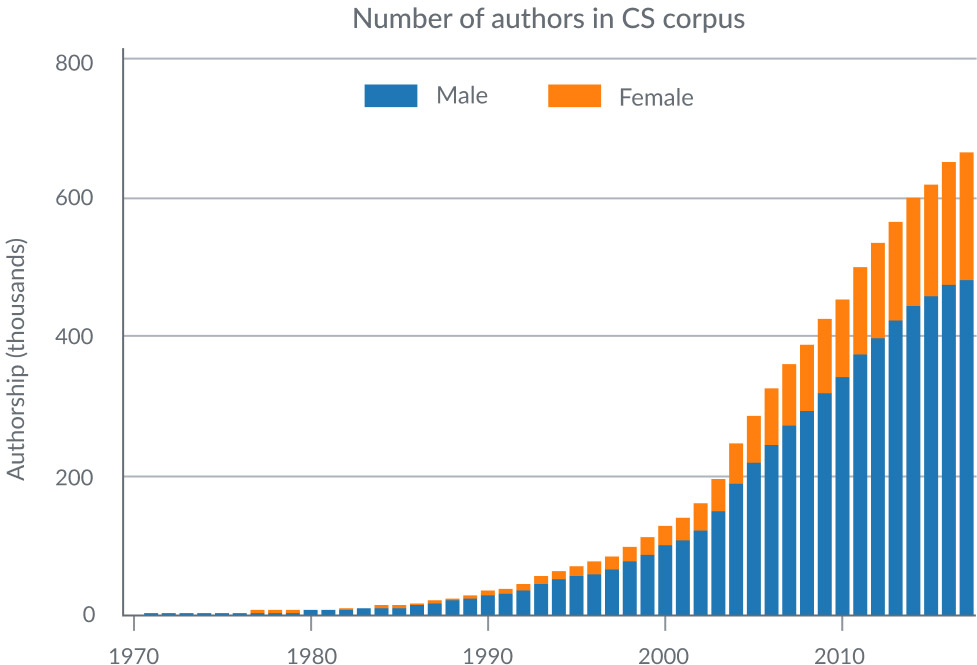

Gender Trends in Computer Science

AI2’s recent study Gender Trends in Computer Science Authorship by Lucy Lu Wang, Gabriel Stanovsky, Luca Weihs, and Oren Etzioni highlighted an important diversity gap in the computer science field. If current trends continue, the gender divide among authors publishing computer science research will not close for more than a century. Fair representation of women and other underrepresented groups is crucial to the future of the field. We want to catalyze the conversation and inspire action by making findings like these known and by providing this important data to others interested in helping to foster equity in the field.

Adversarial Removal of Gender

Mark Yatskar, a Young Investigator at AI2, has published multiple works concerning gender bias and amplification in machine learning datasets, including Adversarial Removal of Gender from Deep Image Representations by Tianlu Wang, Jieyu Zhao, Mark Yatskar, Kai-Wei Chang, and Vicente Ordonez.

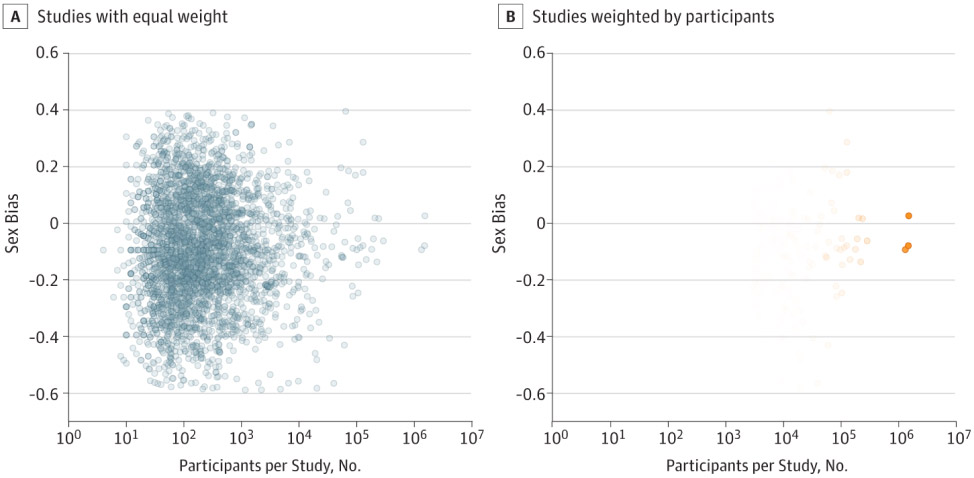

Quantifying Sex Bias

The Semantic Scholar team actively explores meaningful meta-analyses of scientific literature, and recently quantified the problematic sex bias present in clinical studies in their study Quantifying Sex Bias in Clinical Studies at Scale With Automated Data Extraction by Sergey Feldman, Waleed Ammar, Kyle Lo, Elly Trepman, Madeleine van Zuylen, and Oren Etzioni.

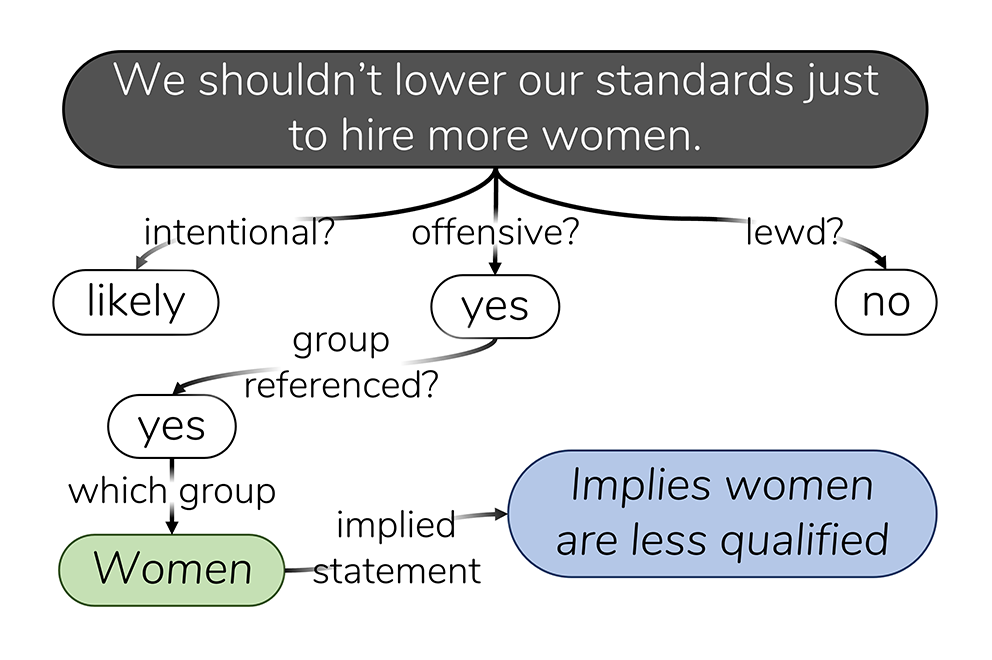

Fighting Biased and Toxic Language

Tackling the challenge of online toxicity requires careful thought, as biased or harmful implications can be subtle and depend heavily on context. Mosaic and AllenNLP team members are addressing this challenge in two ways: by uncovering racial bias in existing hate speech detection tools, and by designing new commonsense reasoning tasks to create more reliable and understandable biased language detection tools with explanations.